TL;DR: AI-assistant tools and features empower employees in their day-to-day tasks. However, they can extend data utilisation in ways that are not always transparent. This blog explains the new organisational risks that can emerge with AI assistants and best practices when introducing new AI tools in the enterprise.

Enterprise AI assistants help employees with tasks such as information discovery, updating incident tickets, generating summaries, automating communication, and more. Existing enterprise software and SaaS offerings are also integrating AI features and capabilities.

While such tools do bring productivity benefits, they also introduce new security risks due to their close integration with potentially sensitive information.

We recently supported a client team in assessing and rolling out employee AI assistant tooling across the organisation. We were able to assuage concerns about security and compliance risks while helping them identify the best-fit solutions for their requirements.

Understanding How Enterprise AI Assistants Work

The early days of AI saw users engaging with the AI model through a chat interface. However, today we are witnessing the rapid adoption of AI features within enterprise software.

For example, Microsoft Copilot brings generative AI directly into the Microsoft 365 suite, while Google integrates Gemini across Google Workspace. Similarly, Salesforce Einstein enhances CRM workflows, and ServiceNow AI helps automate routine IT tasks. Amazon Q Business integrates with existing enterprise systems to support the automation of daily workflows. Snowflake AI Copilot assists users in generating and refining SQL queries and provides a chat interface for interacting with Snowflake data.

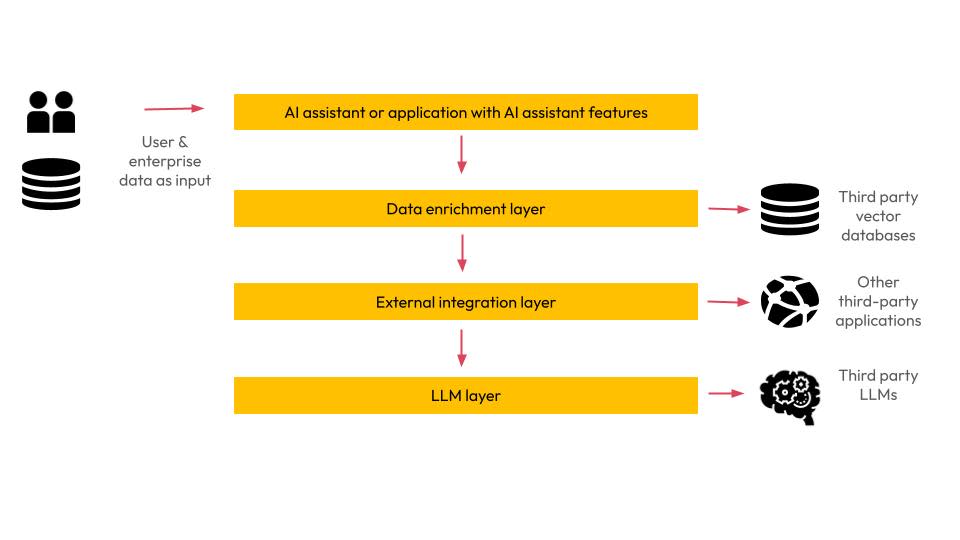

These assistants leverage AI models in ways that are invisible to end users. Any data given to them via user chat or otherwise (text in a document, images in a form, etc.) passes through several underlying technology layers. While each solution may have its nuances, we present a generalised layer structure below that captures common solution patterns.

AI assistant architecture layers

Layer 1 - Core Application

This is the application server where both enterprise and user data are staged for further leverage in AI assistance. Access to this data is paramount for the AI assistant's ability to generate meaningful, context-aware responses.

Layer 2 - Data Enrichment Layer

This layer enriches the initial user inquiry so the AI model can provide the best possible response to the end user. It supplements the user’s query with relevant information pulled from the application itself and, potentially, other connected systems. This enrichment can include:

Past interactions or user history

Metadata such as time, location, or document tags

Application-level signals (e.g., recent clicks, comments, or edits)

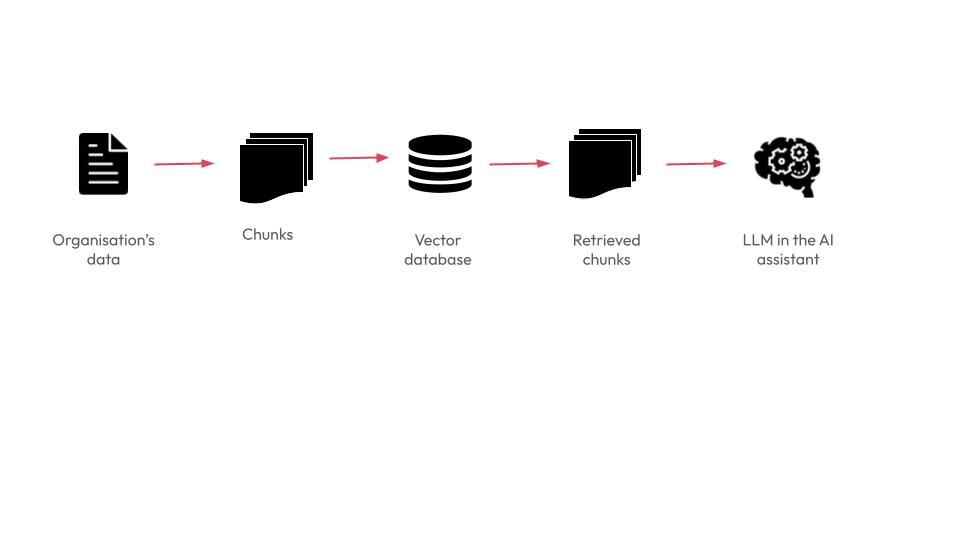

Many enterprise AI assistants also use retrieval-augmented generation (RAG) to improve accuracy. RAG technologies ingest data in real-time from external sources, such as other data repositories or internal documentation. For example, an AI assistant for customer service may automatically pull in data from your product repositories to answer a question about item availability.

RAG commonly involves utilising additional services to intelligently chunk or partition data, convert it into a form that AI can understand (known as vector representation), and then store it in purpose-built data stores called vector databases. Think of a vector database as a hyper-organised filing system. Instead of categorising data into a few folders, you categorise it across thousands of dimensions that the AI can understand and search through instantly.

Vector databases typically reside separately from the core application data store, expanding the surface area to which your data is replicated. They may be self-hosted by the AI assistant solution or may further send your data to other third-party services, such as MongoDB or Neo4j.

RAG in action

Layer 3 - External Integration Layer

Modern AI assistants are evolving with many now capable of autonomous action through integrations with external services. OpenAI first popularised external integrations with their “function calling” feature in 2023. It allows the GPT model to dynamically determine which external services to call and how to format the inputs to best respond to the user request. For example, it can browse the internet to answer complex questions.

External integrations are now being adopted across other LLM providers, with frameworks like LangChain and semantic function calling in Microsoft’s Azure OpenAI also enabling similar patterns. More recently, Anthropic introduced the Model Context Protocol (MCP), an open-source, vendor-neutral standard that allows AI models to interface with a wide range of tools, data sources, and services using a single, consistent API. It effectively acts as a “USB‑C port for AI,” allowing any AI assistant to integrate with a range of third parties.

Layer 3 implements this functionality, allowing your data to be transferred autonomously across other third-party software systems and databases. This may or may not be in your control.

Layer 4 - LLM Layer

The AI model, or large language model (LLM), is the brain of AI assistance. While some enterprise assistants have their own proprietary LLMs, others may send enterprise data to a third-party, such as OpenAI, Anthropic, Mistral, or Deepseek.

Inherent Risks of Using Enterprise Assistants

The above architecture introduces an exhaustive list of concerns; however, we present the ones relevant to most AI assistants below.

Prompt Injections

Carefully crafted prompts could be used to bypass existing access controls and retrieve confidential information beyond an employee’s usual scope of work. This introduces a new layer of cybersecurity risk, compounding traditional threats like employee impersonation.

Multi-Tenant Model Serving

AI models that share computational resources and memory spaces on multi-tenant infrastructure require more stringent security controls than those traditionally available. Otherwise, they may inadvertently expose one organisation's data to another. Malicious actors can also influence LLM responses across tenant boundaries.

Insecure Infrastructure

The rapid adoption of new technologies to support AI workloads can introduce new risks. For example, the vector data store category has rapidly grown to cater for AI workloads, introducing new service providers and offerings in a short span of time. This could mean they are yet to be battle-tested and validated for enterprise production workloads and may not have the necessary security hardening. For example, publicly accessible vector databases may allow anonymous access to the embedding data. Original text can be recovered from the embeddings, effectively nullifying any encryption benefits.

LLM Policies

Third-party LLMs are typically run on a shared, common interface and may not be completely sandboxed from others that interact with them. For example, when an AI assistant makes an API request to the OpenAI GPT 4.1 model, it uses a global endpoint available to anyone with a valid authentication key to interact with.

LLMs may also reuse your data for further model training and AI advancement without your explicit permission. For example, OpenAI's privacy policy states that the Company may store and use your data for its own purposes.

Loss of Data Sovereignty

AI assistance technology is not mature (as of the time of writing), and many technologies in the AI stack have limited geographical coverage. Several may be concentrated over the compute infrastructure in select locations, usually in the US. This raises concerns about data sovereignty.

Regulations such as GDPR or the Australian Privacy Act impose strict requirements on data movement across borders, necessitating clear visibility into how the architecture handles data residency. This includes understanding which components of the AI stack process data in which locations, what data retention policies apply at each layer, and whether appropriate safeguards exist when information traverses jurisdictional boundaries. Without proper sovereignty controls, organisations risk regulatory non-compliance and potential loss of control over sensitive assets.

Other Risks

Some other concerns to address include:

Employees using unauthorised AI tools and technologies increase risks.

Updates to an AI tool could enhance its capabilities, allowing it to bypass security controls restricting the previous version.

Building automated processes and apps over an AI assistant could result in vendor lock-ins that limit flexibility.

The adoption of open-source AI libraries and packages brings security risks, especially given the relative immaturity and rapid growth of these technologies.

The AI shares data autonomously with third-party sources that organisations may not be aware of and are unable to control.

Best Practices when Using AI Assistants

Here are the best practices we recommend to our clients when starting out with enterprise AI assistants.

Develop an AI Governance Strategy

AI governance needs to go beyond internal review boards and meetings to a systemic approach that aligns with both your organisation's values and principles and the relevant laws and regulations. Your governance framework should have:

Policies that outline how AI tools should be integrated and managed across your organisation.

Mechanisms that continuously monitor and evaluate AI systems against the established standards.

The AI governance solution should enable broad control and oversight over AI systems, acting as a responsible promoter and framework for wider AI adoption.

Sandbox Feature Testing

Before rolling out AI features organisation-wide, set up a controlled testing environment. Run a proof-of-concept (prototype) roll-out that showcases the solution and its effectiveness in the workspace. Use fictional or masked data to test new AI tools. AI can assist in generating high-fidelity synthetic data to ensure initial testing is representative of the final usage. This approach enables you to evaluate the functionality and potential vulnerabilities of the technology without exposing your actual data to risk.

Ongoing Monitoring

Keep a close eye on how your organisation’s AI tools evolve over time. It is also essential to remain vigilant for any updates to privacy policies and data handling practices in existing tools. Most AI assistant features have a disable option. Organisations should consider documenting a “turn-off protocol” that allows teams to quickly disable any feature or tool if it starts to pose a risk.

Buying vs. Building Your AI Assistant

With a greater understanding of the risks involved, the question arises as to whether to build an internal AI assistant tool from the ground up or utilise off-the-shelf, integrated AI assistance.

For in-house projects, an open-source LLM can be deployed on your existing infrastructure, and your RAG workflows and external integrations can be set up in a way that you know and control where and how your data flows and is used. You can keep internal control of the entire above-mentioned architecture.

However, any AI development initiative at enterprise scale requires the following:

Data and Engineering Maturity

Foundational data and engineering maturity are key aspects of AI readiness. For example, AI projects may require data standardisation and integration across several data sources, along with mature data validation and testing pipelines. Organisations also require mature DevOps environments that can support model versioning and new AI performance and infrastructure requirements.

Costs

You also need to consider both development and ongoing maintenance costs. Ensuring the reliability of your AI assistant solution is complex due to the inherent non-deterministic nature of AI and can lead to escalated IT operations and maintenance costs. Significant effort is required to establish evaluation and quality mechanisms that enhance user trust and adoption.

Adaptability

Another thing to keep in mind is that AI technologies are evolving rapidly, and any adoption you make now may be superseded quickly. LLM models and the accompanying tooling are both changing rapidly. This presents both a risk and an opportunity. Each comes with trade-offs, either in terms of time and effort, risk exposures or control levers.

Conclusion

Enterprise AI assistants are a powerful productivity tool that empowers employees, but they also introduce new challenges in enterprise data management. The inherent design of AI tools and features creates new, uncontrolled data movement to third parties that the organisation may be unaware of and not authorise.

While it is essential to remain aware of new risks, they should not hinder the adoption and innovation of AI. AI governance, testing, and monitoring enable organisations to implement the necessary safeguards without limiting AI traction.

Organisations that are risk-averse but keen to get started with AI are best served through strategic partnerships with experts who can assist them in every step of their implementation journey.

Contact V2 AI to discuss safe and accelerated AI adoption in your organisation.