TL;DR: The financial sector faces unique challenges in digital innovation due to stringent government regulations and complex compliance requirements that can slow down new software development. This blog explores how AI can accelerate time-to-value for financial institutions while ensuring alignment with key regulatory standards and compliance requirements.

When planning new business initiatives, there are many considerations at play. Balancing security with other non-functional requirements, meeting functional user needs, and staying within the allocated budget is always a juggling act.

For example, during a recent engagement with a leading Australian bank, we observed security consultants being brought in halfway through the development process. The consultants identified missing compliance requirements and features, which forced a rebuild and delayed project delivery by several weeks.

Today's AI models can help solve such challenges. Investing time in a security-aware assistant for your organisation is a good place to start: it gives you early warning of risk-posture areas that need attention, so you can plan for compliance in advance.

We suggest an AI security assistant that analyses the input documents for any new initiative, such as design documents, statements of requirements, and user stories. It presents early recommendations for full compliance, and as more artefacts are produced, they give the assistant more context, so its recommendations improve throughout the project.

The business outcomes for implementing the security assistant include:

Faster time to value, as diverse stakeholders can quickly find all the compliance recommendations and requirements that apply to a project.

Lower communication overheads between developers, architects, internal security consultants, and other compliance stakeholders.

Reduced capital expenditure due to more efficient software development.

Reduced risks and increased innovation capabilities within the financial organisation.

Why Use AI to Cover the Security-Design Gap

Any new digital initiative within a financial organisation requires complex approval processes to get underway. Security requirements and standards are scattered across disparate document stores, necessitating significant research to uncover and understand what is applicable to the project.

For example, requirements related to logging, authentication, encryption, and network security may be spread across different checklists or organised by subject area, so software architects must sift through them to identify what is relevant to their project. They also have to work out how each control should be implemented at the database, application, and infrastructure levels to meet the necessary compliance requirements.

Developers and architects may spend weeks conducting research and liaising with security and compliance teams to obtain approval for new architectures or customer-facing tools. Missed or incorrectly implemented controls can push the project into a review-and-update cycle that causes further delays and missed opportunities.

The Proposed Solution - an AI Security Advisor

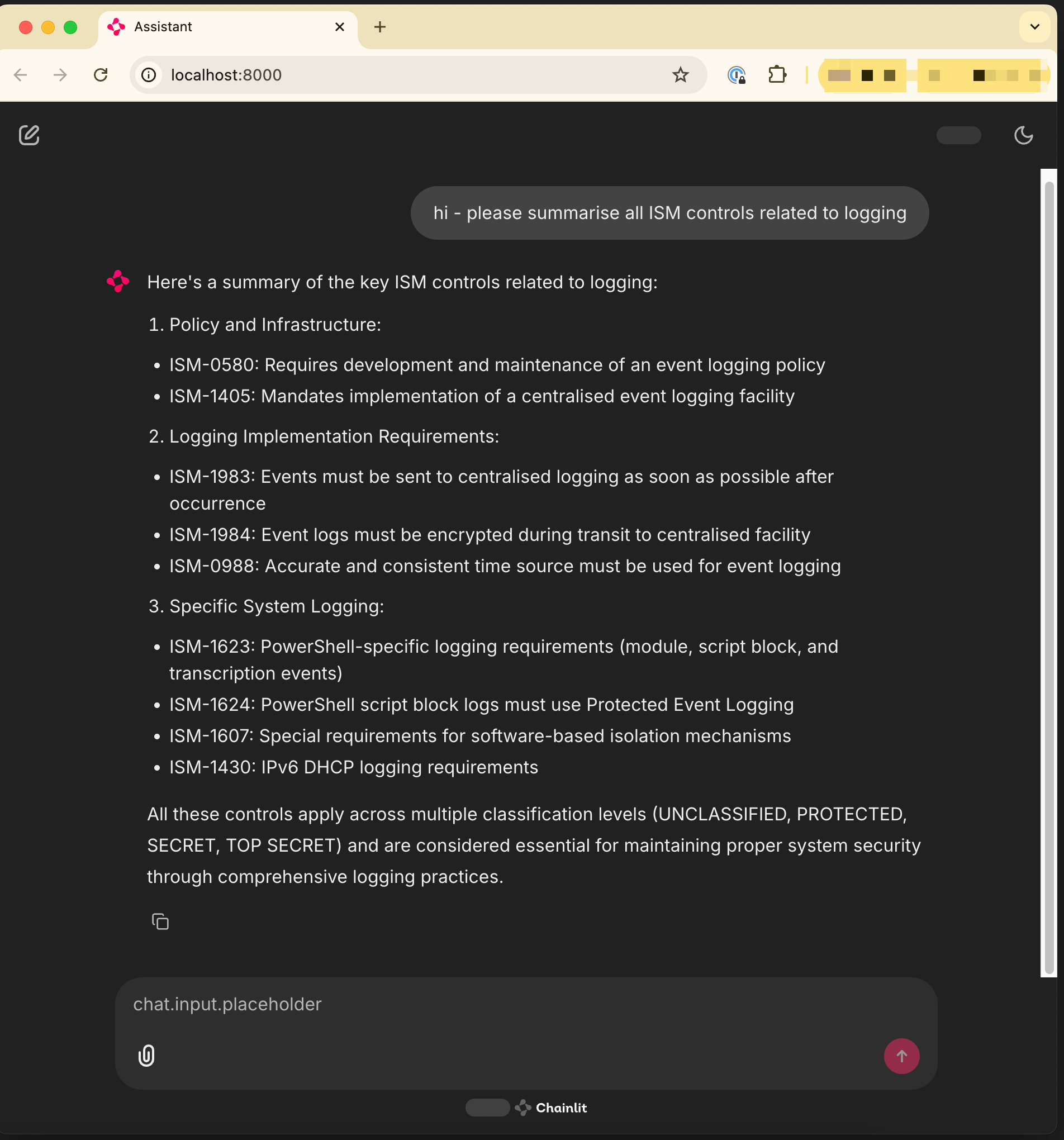

The “Secure Design Advisor” is an AI assistant that reviews technical designs (such as architecture diagrams and cloud configurations) and highlights gaps or compliance issues based on the bank’s policies. Users can hold a natural-language conversation with the assistant to identify requirements in minutes.

The AI assistant has access to information such as:

Internal technical standards (e.g., password policies, encryption standards)

Regulatory guidelines (APRA prudential standards, GDPR for privacy, etc.)

Cloud architecture blueprints (AWS/Azure best practices specific to the organisation).

It analyses any architectural design document against this existing information and provides relevant, contextual recommendations. Each recommendation cites the exact policy document from which the requirement originates, for traceability in future audits. The assistant can also ask follow-up questions to clarify the user's request when needed.
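To make the traceability requirement concrete, the sketch below models a single recommendation together with the policy clause it came from and an optional human approval. It is a minimal illustration, not a prescribed schema: the class names, fields, and the `audit_line` format are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Citation:
    """Points at the policy clause a recommendation was derived from (hypothetical shape)."""
    document: str  # title of the internal standard or regulatory guideline
    section: str   # clause or section identifier within that document


@dataclass
class Recommendation:
    finding: str                    # the gap the assistant found in the design
    action: str                     # what the team should do about it
    citations: list[Citation]       # where the requirement comes from
    reviewer: Optional[str] = None  # set once a human approves the recommendation

    def is_traceable(self) -> bool:
        # A recommendation without a source cannot be audited, so treat it as invalid.
        return len(self.citations) > 0

    def audit_line(self) -> str:
        # Flatten the recommendation into a single line suitable for an audit log.
        sources = "; ".join(f"{c.document} §{c.section}" for c in self.citations)
        status = f"approved by {self.reviewer}" if self.reviewer else "pending review"
        return f"{self.finding} -> {self.action} [{sources}] ({status})"
```

For example, `Recommendation("No at-rest encryption on customer data bucket", "Enable server-side encryption", [Citation("Data Encryption Standard", "4.2")]).audit_line()` yields a log line that names the source clause and shows the recommendation as pending review until a consultant signs it off. Rejecting any recommendation for which `is_traceable()` is false keeps uncited advice out of the audit trail.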

Benefits of the AI Security Advisor in Financial Institutions

The AI-powered “Secure Design Advisor” addresses the problem of scattered, late-discovered requirements by presenting the required information within the project context. It accelerates project timelines by embedding compliance into the workflow rather than tacking it on at the end.

Consider the following benefits for different organisational roles:

Internal Security Consultants

An AI advisor supplements the expertise of your security team by handling routine checks and mapping each recommendation to a known control. Because the AI summarises information and highlights gaps, consultants can validate architectures much faster. This reduces process bottlenecks while keeping a human review layer for added trust.

External Auditors

Every AI-generated suggestion comes with a citation to an internal policy (evidence on the spot). This reduces the burden of proof during audits, since design decisions are automatically documented with rationale.

Architects and Engineers

The AI solution streamlines their work and reduces communication overheads. Instead of waiting days for a compliance review document, they receive instant assistance and useful tips, such as: “Your design lacks data masking for production data copy – consider using XYZ service per our standard.”

AI Solution Architecture

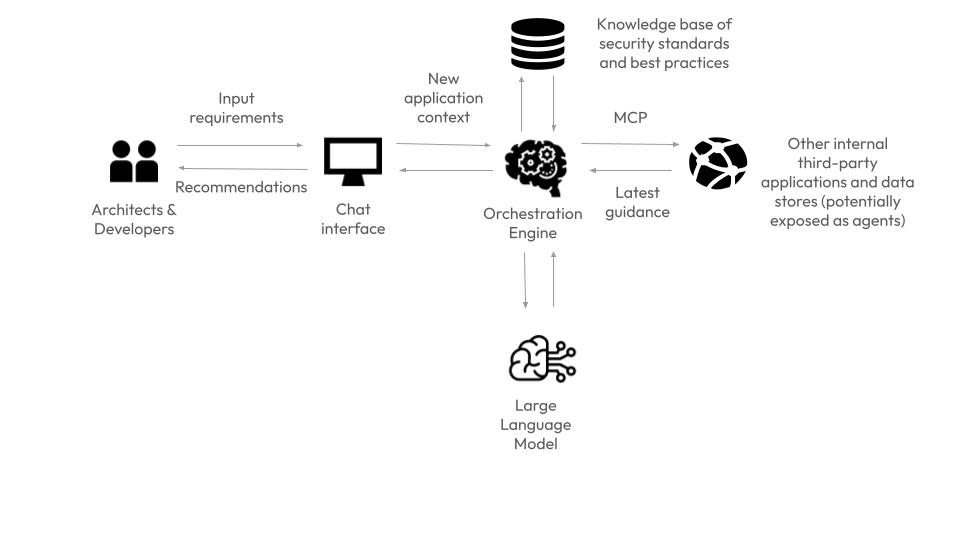

The AI assistant has the following components:

Chat Interface

This layer allows the user to communicate with the solution. It could be a stand-alone application or a chat-based interface integrated into the company’s collaboration suite, like Teams or Slack. Architects can upload a design document or type in questions, and the AI solution responds with suggestions, flagged risks, and links to source documents for more information.

Large Language Model

A large language model (LLM) sits at the core of the solution. It analyses the supplied data, performs the reasoning the task requires, and coordinates with supporting agents to work through it. Choose a hosted model inference service that keeps your data private and processes it within the geographic boundaries you operate in, such as the models available through Amazon Bedrock or Azure AI Foundry (formerly Azure AI Studio). You can also self-host open-source LLMs for full control if required, but we don't recommend this for early iterations, when the focus should be on proving out the solution.
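One simple way to enforce the data-residency requirement is to refuse to call any model endpoint outside an approved list of regions. The sketch below assumes an organisation that only permits inference in Australian regions; the `ModelEndpoint` type and provider labels are hypothetical, while the region codes are real AWS and Azure region names.

```python
from dataclasses import dataclass

# Assumption: only Australian regions are approved for inference.
# "ap-southeast-2" (Sydney) and "ap-southeast-4" (Melbourne) are AWS region codes;
# "australiaeast" and "australiasoutheast" are Azure region names.
ALLOWED_REGIONS = {"ap-southeast-2", "ap-southeast-4", "australiaeast", "australiasoutheast"}


@dataclass(frozen=True)
class ModelEndpoint:
    provider: str  # hypothetical label, e.g. "bedrock" or "azure"
    model_id: str  # whatever identifier the provider uses for the model
    region: str    # region where inference requests are processed


def check_residency(endpoint: ModelEndpoint, allowed: set[str] = ALLOWED_REGIONS) -> None:
    """Raise if the endpoint would process data outside the approved regions."""
    if endpoint.region not in allowed:
        raise ValueError(
            f"{endpoint.provider}:{endpoint.model_id} runs in {endpoint.region}, "
            f"which is outside the approved regions {sorted(allowed)}"
        )
```

Calling `check_residency` before every model invocation turns a policy statement into a hard failure. Some hosted services can route requests across regions, so confirm where requests are actually processed rather than relying only on the endpoint's home region.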

Enterprise Knowledge Bases

Identify all existing systems containing relevant data, like:

Policies and controls found in information security manuals and standards, compliance checklists, and APRA guidance PDFs.

Standardised technology blueprints and patterns found in internal architecture guides, approved solution designs, and secure coding guidelines.

Historical design review data found in past security review reports, FAQ documents on common issues, and how they were resolved.

All of this information must be indexed into a vector database: a specialised database that stores numerical embeddings of document chunks, so the AI solution can find semantically related content even when the wording differs. Internally, the solution converts the user's input into an embedding and retrieves the chunks most similar in meaning from the vector database. For example, if the design involves storing customer data in an Amazon S3 bucket, the AI solution might retrieve the “data encryption standard” document and find the at-rest encryption requirements for the bucket, along with the in-transit encryption requirements for other parts of the solution.
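The retrieval step can be sketched in a few lines. To keep the example self-contained, a simple term-frequency vector stands in for a real embedding model and an in-memory dictionary stands in for the vector database; the policy excerpts and their source labels are hypothetical. The ranking logic (embed once at index time, embed the query, sort by cosine similarity) is the same idea a vector database applies at scale.

```python
import math
import re
from collections import Counter

# Hypothetical policy excerpts; in practice these are chunks of your real standards.
CHUNKS = {
    "Data Encryption Standard §4": "Customer data stored in object storage such as S3 buckets must use at-rest encryption with managed keys.",
    "Data Encryption Standard §5": "All data in transit must use TLS 1.2 or higher.",
    "Logging Standard §2": "Application logs must be retained for seven years and must not contain passwords.",
}


def embed(text: str) -> Counter:
    # Stand-in for an embedding model: a term-frequency vector over lowercase tokens.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse vectors; missing terms count as zero.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Index step: compute a vector for every chunk once, up front.
INDEX = {source: embed(text) for source, text in CHUNKS.items()}


def retrieve(query: str, k: int = 2) -> list[tuple[str, float]]:
    """Return the k chunk sources most similar to the query, best first."""
    q = embed(query)
    scored = [(source, cosine(q, vec)) for source, vec in INDEX.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]
```

With this toy index, a query such as "We will store customer data in an S3 bucket" ranks the at-rest encryption clause first. A real embedding model would also match related wording ("bucket" and "buckets", "encrypt" and "encryption") that this term-count stand-in misses.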

External and Internal APIs

Not all required security information will be present in internal knowledge stores. Some may need to be retrieved from publicly available databases via third-party APIs, and key data may also be locked in internal applications that expose it only through APIs. The AI solution can use the Model Context Protocol (MCP), an emerging open standard, to connect to these systems and access the data it needs.
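MCP messages are JSON-RPC 2.0, and a client invokes a server-side tool with the `tools/call` method. The sketch below only builds that request message, to show its shape; the tool name `lookup_control` and its `control_id` argument are hypothetical, standing in for whatever an internal governance system might expose. In practice you would use an official MCP SDK, which also handles the transport, the initialisation handshake, and tool discovery via `tools/list`.

```python
import itertools
import json

# Monotonically increasing request ids so responses can be matched to requests.
_ids = itertools.count(1)


def tools_call_request(tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one of its tools."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_ids),        # lets the client match the server's response to this request
        "method": "tools/call",  # MCP method for invoking a server-side tool
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)
```

For example, `tools_call_request("lookup_control", {"control_id": "CTRL-001"})` produces a request the orchestration layer could send to a hypothetical internal controls server, whose response would then be added to the LLM's context.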

If your organisation uses a “policies as code” approach, such as NIST's OSCAL (Open Security Controls Assessment Language), you can also connect the AI system with existing tooling via MCP.
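OSCAL catalogs are structured documents (JSON, XML, or YAML) in which controls are organised into groups and control enhancements nest under their parent control. The sketch below walks a heavily trimmed, hypothetical excerpt in that shape and flattens it into (control id, title, group) tuples that could be indexed or exposed as a tool. Real catalogs, such as NIST SP 800-53 in OSCAL JSON, carry many more required fields (for example a `uuid` and fuller `metadata`) and richer control content.

```python
import json

# Heavily trimmed, hypothetical excerpt in the shape of an OSCAL catalog.
CATALOG_JSON = """
{
  "catalog": {
    "metadata": {"title": "Example Internal Control Catalog"},
    "groups": [
      {"id": "ac", "title": "Access Control",
       "controls": [
         {"id": "ac-2", "title": "Account Management",
          "controls": [{"id": "ac-2.1", "title": "Automated System Account Management"}]}
       ]},
      {"id": "sc", "title": "System and Communications Protection",
       "controls": [{"id": "sc-28", "title": "Protection of Information at Rest"}]}
    ]
  }
}
"""


def iter_controls(controls, group_title):
    # Enhancements sit under their parent control, so recurse into nested "controls".
    for control in controls:
        yield control["id"], control["title"], group_title
        yield from iter_controls(control.get("controls", []), group_title)


def list_controls(catalog_text: str) -> list[tuple[str, str, str]]:
    """Flatten an OSCAL-style catalog into (control id, title, group title) tuples."""
    catalog = json.loads(catalog_text)["catalog"]
    results = []
    for group in catalog.get("groups", []):
        results.extend(iter_controls(group.get("controls", []), group["title"]))
    return results
```

Because every tuple keeps the control identifier, any recommendation built from this data can cite the exact control it maps to.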

Orchestration Engine

This layer collates all the required data and feeds it to the LLM, combining user input with relevant documents and third-party data into a well-designed prompt. It ensures the LLM is “shown” only the relevant internal content, which helps mitigate hallucination and keeps the data flow under control.
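A minimal sketch of the prompt-assembly step follows. It assumes retrieval has already returned a list of (source, text) pairs; the instruction wording and the numbered `[n]` citation convention are illustrative choices, not a standard. Numbering the sources lets the model cite them and lets a later check confirm that every citation points at content the model was actually shown.

```python
def build_prompt(question: str, chunks: list[tuple[str, str]]) -> str:
    """Assemble a grounded prompt from the user's question and retrieved (source, text) chunks."""
    # Number each source so the model can cite it and the answer can be checked later.
    context = "\n".join(
        f"[{i}] ({source}) {text}" for i, (source, text) in enumerate(chunks, start=1)
    )
    return (
        "You are a security design advisor for the bank.\n"
        "Answer using ONLY the numbered sources below. Cite sources as [n] after each "
        "recommendation. If the sources do not cover the question, say so instead of guessing.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question:\n{question}\n"
    )
```

Keeping the instructions, the sources, and the question in clearly separated sections also makes it easier to log exactly what the model saw for each recommendation.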

Building a Trustworthy AI Security Assistant

Because the AI assistant provides security recommendations for future IT initiatives, it must itself be securely deployed and operate within your organisation's responsible AI framework. All architecture components should reside in a secure network environment. If deployed on-premises, lock down the infrastructure that hosts your LLM as tightly as any database holding sensitive data. If data sensitivity is high, consider encrypting the stored embeddings as well.

Other safeguards to consider include:

Role-based access control (RBAC) to ensure only authorised users can access the assistant.

Content filtering that blocks harmful or inappropriate content in AI model inputs and outputs.

Topic restrictions so it does not respond to topics outside of security recommendations.

Contextual grounding checks that compare responses against the retrieved reference sources to detect and filter AI hallucinations.

Testing and monitoring for ongoing compliance.

Always keep a human in the loop to approve or reject any AI recommendations.

These guardrails are often configurable within a cloud-hosted AI platform but may require additional tooling if implemented on-premises.
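Where a managed platform is not available, a few of these guardrails can be approximated in application code. The sketch below assumes a hypothetical role list and a crude keyword allow-list for topic restriction, and its output check is a deliberately simple citation check: it flags paragraphs with no `[n]` citation or with a citation to a source that was never supplied. It is a weak stand-in for true contextual grounding, since it cannot tell whether a correctly cited claim actually matches its source, which is one more reason to keep a human reviewer in the loop.

```python
import re

# Hypothetical roles allowed to use the assistant.
AUTHORISED_ROLES = {"architect", "engineer", "security_consultant"}

# Crude topic allow-list; production systems would use a classifier or a managed guardrail.
SECURITY_TERMS = {"encryption", "logging", "authentication", "network", "access",
                  "compliance", "control", "policy", "data"}


def check_request(user_roles: set[str], question: str) -> None:
    """Input guardrails: role-based access control first, then a topic restriction."""
    if not user_roles & AUTHORISED_ROLES:
        raise PermissionError("user is not authorised to use the assistant")
    words = set(re.findall(r"[a-z]+", question.lower()))
    if not words & SECURITY_TERMS:
        raise ValueError("question appears to be outside security and compliance topics")


def check_grounding(answer: str, num_sources: int) -> list[str]:
    """Output guardrail: every paragraph must cite at least one source that was supplied."""
    problems = []
    for paragraph in filter(None, (p.strip() for p in answer.split("\n\n"))):
        cited = [int(n) for n in re.findall(r"\[(\d+)\]", paragraph)]
        if not cited:
            problems.append(f"uncited claim: {paragraph[:60]}")
        elif any(n < 1 or n > num_sources for n in cited):
            problems.append(f"cites a source that was not provided: {paragraph[:60]}")
    return problems
```

Any answer for which `check_grounding` returns problems can be blocked or routed to a security consultant, and even answers that pass should still go to a human for approval before they shape a design.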

Conclusion

By combining human expertise with GenAI’s speed, banks and insurers can innovate faster and deliver value to customers while confidently meeting their compliance requirements.

At V2 AI, our team has built working demos of several GenAI solutions, including the AI security advisor and an AI conversational analytics tool. Contact us for help implementing AI use cases that bring new efficiencies to your organisation.