TL;DR: As financial organisations move to embed AI agents in critical business processes, a key question arises: how can agents access the information they need while still adhering to existing governance and access controls? Authentication alone is not enough. You also need granular authorisation, so that you can enforce least-privilege access for agents at scale without disrupting existing processes. This blog explores one approach to granular authorisation that empowers agents to perform necessary tasks without overstepping compliance boundaries.

In a previous engagement with a leading financial institution, we built AI agents to support the institution’s financial product sales process. Multiple agents, each with subject matter expertise in areas such as product information, competitor offerings, and personalised customer insights, collaborated to provide concise and timely product recommendations to customers.

The solution provided the following measurable business outcomes for our client:

Sales efficiency by autonomously curating data and providing key product information and analytics to empower decision-making for both customers and advisers.

Sales cycle acceleration by empowering advisers to provide consistent, transparent, and tailored product information to customers.

Workflow optimisation by reducing communication overheads and information hand-offs between advisers and customers.

One challenge was ensuring that each agent could access only a filtered view of shared information. For instance, an agent interacting with one customer should not inadvertently leak information about another customer, even though both requests hit the same tool, which stores information about both customers.

We also wanted to limit agent actions based on agent type. For example, the insights agent could only read customer activity history, while the onboarding agent could dynamically update the data once the customer signed up for a product.

Challenges with Traditional Access Control Mechanisms

Unfortunately, the problem cannot be solved simply by assigning human identities and access controls to agents. AI agents are software components that interact with other software systems programmatically: over the Model Context Protocol (MCP), through API calls authenticated with API keys, and through other system integrations. Traditional authentication and authorisation methods, designed for humans, prove inadequate for the following reasons.

Intent Assumptions

Human users are typically authorised with permissions for roles that remain static for weeks or months, if not years. Traditional software applications (for example, in traditional robotic process automation) also have fixed authorisation levels because of the well-defined scope of tasks they perform.

However, AI agents require adaptive mechanisms, with permissions that change dynamically as their intent evolves in response to changes in operational context, risk level, mission objectives, or real-time data analysis. Contextual and behavioural factors should influence access decisions more than static attributes.

Trust Assumptions

Traditional systems assume that, once authenticated, a human remains trustworthy throughout the session. AI agents break this assumption: they can be targeted by adversarial attacks, and they can misjudge a situation or reason incorrectly. Broad, persistent privileges therefore increase security risk, because a compromised or mistaken agent keeps all of its permissions for the rest of the session. Continuously validating each request is more secure than authorising once per session.

Benefits of Context-Aware Authentication and Authorisation

AI agents require short-lived, context-aware authorisation delegations tailored to their current task and operational scope. The principle of least privilege can be upheld by granting agents:

Only the minimum necessary permissions for their current operation.

Only the minimum necessary access to the exact data needed to perform the current operation.
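To make the two least-privilege conditions concrete, here is a minimal sketch of a short-lived, task-scoped grant. It assumes a hypothetical `Grant` record that names one customer, the smallest set of actions the task needs, and an expiry a few minutes out; the names `Grant`, `issue_grant`, and the action strings are illustrative, not part of any specific product. Because `permits` is checked on every call, it also reflects the continuous validation described earlier rather than a one-off session check.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    """Hypothetical short-lived delegation covering one task for one customer."""
    agent_id: str
    customer_id: str            # the only customer whose data this grant covers
    actions: frozenset[str]     # the minimum set of actions the task needs
    expires_at: datetime        # grants expire after minutes, not weeks

    def permits(self, action: str, customer_id: str, now: datetime) -> bool:
        # Checked on every call, not once per session: an expired grant,
        # an unlisted action, or another customer's data is refused.
        return (
            now < self.expires_at
            and action in self.actions
            and customer_id == self.customer_id
        )


def issue_grant(agent_id: str, customer_id: str, actions: set[str],
                ttl: timedelta = timedelta(minutes=5)) -> Grant:
    """Issue a grant that lives only as long as the task is expected to take."""
    now = datetime.now(timezone.utc)
    return Grant(agent_id, customer_id, frozenset(actions), now + ttl)


grant = issue_grant("insights-agent", "cust-001", {"read_activity"})
now = datetime.now(timezone.utc)
print(grant.permits("read_activity", "cust-001", now))    # True
print(grant.permits("read_activity", "cust-002", now))    # False: other customer
print(grant.permits("update_activity", "cust-001", now))  # False: action not granted
print(grant.permits("read_activity", "cust-001", now + timedelta(minutes=10)))  # False: expired
```

The five-minute default is an arbitrary choice for illustration; in practice the lifetime would be tuned to how long a given task is expected to run.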

The benefits of this approach include:

Improved Compliance

Context-aware authentication and authorisation improve audit trails and accountability. You have the necessary logging data to investigate why your agents accessed a particular system and what data they read or modified. Granular tracking creates clear relationships between business purposes and permissions, simplifying forensic analysis.
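As a rough illustration of the kind of record that makes this forensic analysis possible, the sketch below serialises one authorisation decision as a JSON line that links the agent, the action, the resource, and the business purpose. The function `audit_record` and its field names are hypothetical; a real deployment would map them onto whatever schema its logging or SIEM platform expects.

```python
import json
from datetime import datetime, timezone


def audit_record(agent_id: str, action: str, resource: str,
                 purpose: str, decision: str, reason: str) -> str:
    """Serialise one authorisation decision as a JSON line for an append-only log.

    Field names are illustrative assumptions, not a standard schema.
    """
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent_id": agent_id,    # which agent asked
        "action": action,        # what it tried to do
        "resource": resource,    # which system or record it touched
        "purpose": purpose,      # the business task that justified the request
        "decision": decision,    # e.g. "allow", "deny", or "allow_filtered"
        "reason": reason,        # which policy rule produced the decision
    }, sort_keys=True)


print(audit_record("insights-agent", "read_activity", "crm/activity/cust-001",
                   purpose="product recommendation for cust-001",
                   decision="allow_filtered",
                   reason="insights agents may read activity for the current customer"))
```

Recording the purpose alongside the decision is what ties each permission back to a business reason when someone later asks why an agent touched a record.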

Efficiency

It reduces the need for centralised, per-agent identity and access management, which becomes difficult to scale as your agent ecosystem grows. Instead, the context is set by your business rules, which can be managed at the data level rather than at the agent level. Your security policies can then evolve without large-scale credential rotation efforts.

Cost Management

The solution also aligns well with modern cloud-native architectures, where services (and their associated agents) constantly scale up and down. You can orchestrate agents, spinning them up and down as needed, without reprovisioning permissions for each instance, because access is decided per request. Agents run only as long as necessary, helping you make the most of your AI budget.

Implementing Context-Aware Authentication and Authorisation

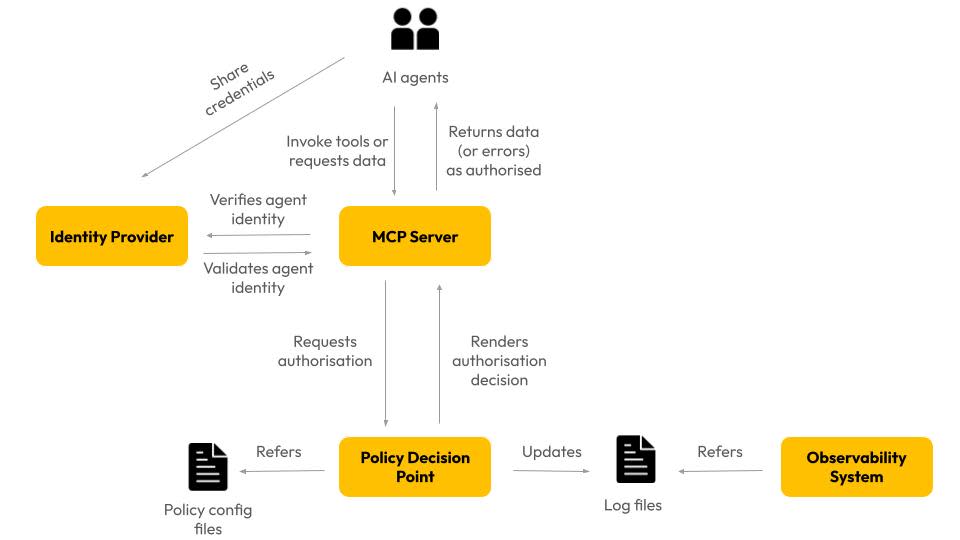

Our solution has the following components:

Identity Provider

An identity provider (IdP) authenticates the agent. The agent proves its identity by presenting its credentials and, in return, receives a shareable cryptographic proof, such as a signed token, that it can present as proof of identity when interacting with APIs or MCP servers.

Policy Decision Point

The policy decision point (PDP) makes authorisation decisions once the agent’s identity has been verified. It consults policy configuration files in which you define what each agent can or cannot do, and what it can access, based on its role.

The agent raises a request when it wants to complete a specific task, like accessing a document or updating some information. The PDP evaluates the context of the request and can:

Make a Boolean decision, such as “yes, this agent can access this document” or “no, this agent cannot recommend this product.”

Provide conditional approval, such as “this agent can access only the documents with PII flags” or “this agent can access only those belonging to a specific customer.”
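The sketch below shows one way a PDP could produce both kinds of decision, including the agent-type and per-customer restrictions from our engagement. It assumes a hypothetical in-memory `POLICY` table keyed by agent role and a hypothetical `risk_level` context attribute; a real PDP would load policies from configuration and draw context from richer signals. A denied action yields a plain Boolean refusal, while an allowed one carries a `row_filter` condition that the enforcement point must apply to the data it returns.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy configuration: which actions each agent role may perform.
POLICY = {
    "insights": {"read_activity"},
    "onboarding": {"read_activity", "update_activity"},
}


@dataclass(frozen=True)
class Request:
    role: str                   # taken from the verified identity token
    action: str                 # e.g. "read_activity"
    customer_id: str            # the customer the agent is currently serving
    risk_level: str = "normal"  # hypothetical contextual signal


@dataclass(frozen=True)
class Decision:
    allow: bool
    row_filter: Optional[dict] = None  # condition the PEP must apply to returned data
    reason: str = ""


def decide(req: Request) -> Decision:
    """Evaluate a request against the role policy and its runtime context."""
    if req.action not in POLICY.get(req.role, set()):
        return Decision(False, reason=f"role {req.role!r} may not {req.action}")
    if req.risk_level == "high" and not req.action.startswith("read_"):
        return Decision(False, reason="writes are suspended while risk is high")
    # Conditional approval: only records belonging to the current customer.
    return Decision(True, row_filter={"customer_id": req.customer_id},
                    reason="scoped to current customer")


print(decide(Request("insights", "update_activity", "cust-001")).allow)  # False
print(decide(Request("onboarding", "update_activity", "cust-001")))
```

Keeping the customer scope in the decision, rather than in the agent’s identity, is what lets the same agent serve many customers without ever holding standing access to all of them.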

Policy Enforcement Point

The policy enforcement point (PEP) is the traditional “backend,” such as a REST API or MCP server, that calls the PDP and enforces its decision. For example, it may return an HTTP 403 (Forbidden) response to the agent for unauthorised actions, or return the requested data for authorised ones.
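Here is a minimal sketch of the enforcement side: a tool handler that asks a PDP for a decision and applies it before any data leaves the backend. The data store, `stub_pdp`, and `read_activity_tool` are hypothetical, and the response is modelled as an HTTP-style status code and body for simplicity; an MCP server would report a refusal through the protocol’s own error mechanism instead. Note that both customers’ records sit in the same table, which mirrors the shared-tool problem described earlier.

```python
from typing import Callable

# Hypothetical shared data store: both customers' records live in one table.
ACTIVITY = [
    {"customer_id": "cust-001", "event": "viewed savings account"},
    {"customer_id": "cust-002", "event": "opened credit card"},
]


def stub_pdp(role: str, action: str, customer_id: str) -> dict:
    """Stand-in for the PDP: returns {"allow": bool, "row_filter": dict or None}."""
    if role == "insights" and action == "read_activity":
        return {"allow": True, "row_filter": {"customer_id": customer_id}}
    return {"allow": False, "row_filter": None}


def read_activity_tool(role: str, customer_id: str,
                       pdp: Callable[[str, str, str], dict] = stub_pdp) -> tuple[int, object]:
    """PEP: ask the PDP, then enforce its decision before returning any data."""
    decision = pdp(role, "read_activity", customer_id)
    if not decision["allow"]:
        return 403, {"error": "forbidden"}
    # An allow without a filter means unconditional access; otherwise keep
    # only the rows that match every condition in the filter.
    row_filter = decision["row_filter"] or {}
    rows = [r for r in ACTIVITY
            if all(r.get(k) == v for k, v in row_filter.items())]
    return 200, rows


print(read_activity_tool("insights", "cust-001"))    # (200, [cust-001's row only])
print(read_activity_tool("onboarding", "cust-001"))  # (403, {'error': 'forbidden'})
```

Filtering inside the backend, rather than trusting the agent to discard other customers’ rows, means a confused or compromised agent never sees data it was not entitled to.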

How It Works

A user interacts with the company’s AI agent to request information.

The agent completes authentication with the identity provider.

The agent invokes the tools relevant to the request.

The MCP server authenticates the agent by cross-checking with the identity provider.

The MCP server invokes the PDP to perform an authorisation check in the context of the request.

The PDP renders a decision and logs it for audit purposes.

The PDP returns the decision to the MCP server.

The MCP server enforces the decision: it allows or blocks the agent’s attempted action, or returns the requested data limited to what the PDP’s decision permits.

The agent thus acts within the auditable, verifiable bounds set by the authorisation framework.

Conclusion

Unlocking the value of agentic AI in finance depends on a zero-trust software architecture. Strict guardrails and an audit trail for software, security, and business stakeholders are table stakes. Simple, coarse-grained role-based access control has limited efficacy on its own and restricts the AI benefits you can realise.

Context-aware authentication and authorisation give agents the freedom to make autonomous decisions to complete tasks while ensuring they operate within predetermined guardrails.