TL;DR: Threat actors are using AI to launch attacks at unprecedented speed and scale. Capabilities that once required time, skill, and coordination are now being industrialised. This article explores strategies blue teams can use to counter AI-enabled attacks.

The window between vulnerability discovery and exploitation has collapsed. What used to take days or hours now takes minutes. According to CrowdStrike’s latest Global Threat Report, the average breakout time (how long an adversary takes to start moving laterally across a network after initial access) has fallen to just 48 minutes, with the fastest observed at 51 seconds. Attack activity has also surged, with targeted industries experiencing 200-300% more attacks in the last 12 months.

At V2 AI, we recently hosted a debate in Melbourne, in front of an audience of 300, on how AI is reshaping the balance of power in cybersecurity. The question at the centre: are AI attacks really as impossible to defend against as the media claims?

The debate proved otherwise! The blue team won, with several arguments demonstrating that disciplined, often traditional, defence strategies can effectively neutralise many of the perceived advantages AI gives attackers.

After all, for every high-profile AI attack that makes the news, there are millions of attempts that enterprise blue teams counter daily that no one talks about.

Some learnings from the debate are shared below.

Implement a Layered Defence Mechanism

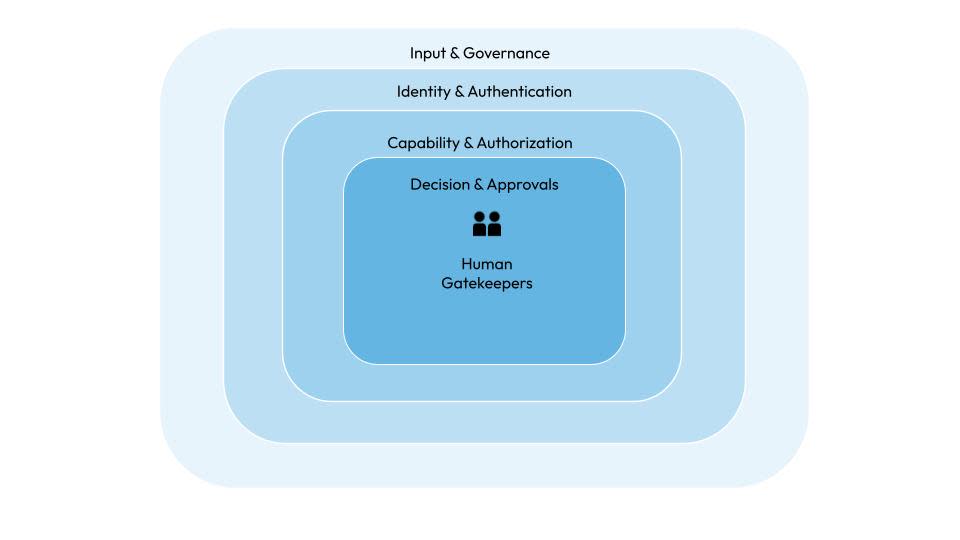

Your AI system design should assume it will fail, be manipulated, or behave unexpectedly at some point. The goal of layered defence is to ensure that when this happens, the impact is tightly contained.

AI models may seem inherently vulnerable given the sheer volume of prompt injection attempts. Yes, there are countless ways adversaries can manipulate models, from talking about a grandmother who passed away to asking for a poem that leaks secrets.

The solution lies in creating a layered defence strategy.

Choose the Right Model

First, it is important to choose the right model. There are over 2 million models on Hugging Face, and some have more vulnerabilities than others. Choose enterprise-grade models with built-in guardrails.

Validate that the model is what it claims to be using hash verification and signed releases.
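
As a rough illustration of the hash-verification half of this check, the sketch below compares a downloaded model file against a pinned SHA-256 digest. The function names and the idea of a separately published digest are assumptions made for this example. Signature verification of the release itself is out of scope here and would need the publisher's signing tooling.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file in chunks so large model weights never sit fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's digest matches the pinned digest.

    `expected_sha256` is assumed to come from a trusted source, such as a
    signed release manifest, and not from the same place as the file itself.
    """
    actual = sha256_of_file(path)
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(actual, expected_sha256.strip().lower())
```

A deployment pipeline could call `verify_model_artifact` before loading any weights and refuse to continue on a mismatch, in the same way it would fail a build on an unpinned dependency.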

Refuse vulnerable or unverified models the same way you would block unpatched libraries.

Choose smaller, purpose-built models where possible, as they reduce risk by limiting the scope of what the AI system can reason about or act upon.

Even then, always use synthetic data when fine-tuning or training. Verify integrity at every stage: training, packaging, deployment, and runtime.

Rethink Identity for Autonomous Agents

Traditional Web 2.0 security models assume software behaves deterministically once authenticated. Autonomous agents break that assumption, because their behaviour can change with every prompt and every piece of context they read. Instead, blue teams should consider identity providers offering agent identities, where each agent has its own credentials, policies, and audit trail. This enables fine-grained authorisation with continuous validation, and rapid revocation if an agent is compromised.

Least Privilege Is Non-Negotiable

AI agents should never inherit broad or standing permissions. Instead, grant only the minimum access required for the task at hand, scoped to a specific action, dataset, and time window. Customer support agents, for example, should only see the records of the customer they are actively assisting, not the entire customer database. Privileges should expire automatically once the task completes.
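
One way to picture a grant scoped to an action, a dataset, and a time window is the minimal sketch below. The `Grant` type, the `customer:1042` resource naming, and the function names are all hypothetical, chosen only to mirror the customer support example above.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Grant:
    agent_id: str
    action: str        # e.g. "read"
    resource: str      # e.g. "customer:1042" (hypothetical naming scheme)
    expires_at: float  # Unix timestamp after which the grant is void


def issue_grant(agent_id: str, action: str, resource: str,
                ttl_seconds: float, now: Optional[float] = None) -> Grant:
    """Create a grant for exactly one action on one resource, valid for ttl_seconds."""
    now = time.time() if now is None else now
    return Grant(agent_id, action, resource, now + ttl_seconds)


def is_allowed(grant: Grant, agent_id: str, action: str, resource: str,
               now: Optional[float] = None) -> bool:
    """Allow only an exact match on agent, action, and resource before expiry."""
    now = time.time() if now is None else now
    return (
        grant.agent_id == agent_id
        and grant.action == action
        and grant.resource == resource
        and now < grant.expires_at
    )
```

Because the grant is frozen and carries its own expiry, there is nothing for the agent to extend or widen. A new task means a new grant issued by the policy layer.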

Control Tool Access as Tightly as Data Access

Many AI breaches do not occur at the model layer, but through the tools agents can call. API keys and refresh tokens stored on disk, overly permissive service accounts, or long-lived credentials dramatically increase risk. Ensure tool access is controlled through agent identity systems.
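
A simplified sketch of routing tool calls through agent identity might look like the following. `ToolGateway` is a hypothetical in-process stand-in for a real identity and policy service. It denies by default, supports revocation, and records every attempt.

```python
from typing import Any, Callable


class ToolGateway:
    """Hypothetical gateway: agents never call tools directly, only through here."""

    def __init__(self, allowed_tools: dict[str, set[str]]):
        # Copy the allowlist so callers cannot mutate policy after construction.
        self._allowed = {agent: set(tools) for agent, tools in allowed_tools.items()}
        self._revoked: set[str] = set()
        self.audit_log: list[tuple[str, str, bool]] = []

    def revoke(self, agent_id: str) -> None:
        """Cut off a compromised agent immediately."""
        self._revoked.add(agent_id)

    def call(self, agent_id: str, tool_name: str,
             tool_fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        permitted = (
            agent_id not in self._revoked
            and tool_name in self._allowed.get(agent_id, set())
        )
        # Log the attempt whether or not it is permitted.
        self.audit_log.append((agent_id, tool_name, permitted))
        if not permitted:
            raise PermissionError(f"{agent_id} may not call {tool_name}")
        return tool_fn(*args, **kwargs)
```

In this design the credentials for the underlying tool would live behind the gateway, not with the agent, so there is no API key on disk for a manipulated agent to leak.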

Defend Against Privilege Escalation

Attackers increasingly attempt to manipulate agents into expanding their own authority. This could be done through task chaining, goal manipulation, or exploiting ambiguous instructions.

Ensure permissions are neither standing nor self-modifiable. Your policy enforcement layer should prevent agents from obtaining new privileges without human approval.

Introduce Human Gates for Critical Decision Points

Not every agent action needs to be fully autonomous. High-impact operations, such as payments, customer data changes, production deployments, or security policy updates, should pass through human-in-the-loop approval. This can be implemented as progressive autonomy: agents start with read-only or advisory roles and earn expanded permissions as confidence in their behaviour grows.
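
A human gate can be sketched as a thin wrapper around action execution. The action names, the `approve` callback, and the `BLOCKED` message below are illustrative assumptions. In practice the approval step would be a ticket, a chat prompt, or a signed request instead of a Python callable.

```python
from typing import Callable

# Hypothetical labels for the high-impact operations listed above.
HIGH_IMPACT_ACTIONS = {
    "payment",
    "customer_data_change",
    "production_deploy",
    "security_policy_update",
}

BLOCKED = "blocked: human approval required"


def execute_action(action: str,
                   run: Callable[[], str],
                   approve: Callable[[str], bool]) -> str:
    """Run low-impact actions directly; ask a human before high-impact ones."""
    if action in HIGH_IMPACT_ACTIONS and not approve(action):
        # The side effect in `run` never happens without approval.
        return BLOCKED
    return run()
```

The point of the sketch is that the gate sits in the capability layer. However a prompt is worded, the payment function is simply not invoked until `approve` returns True.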

Key lesson: Prompt injection manipulates conversation, not capability. Enforcing safeguards within capability layers is the best approach to a comprehensive AI defence.

Re-Skill the Human Layer

Every AI system ultimately reflects human intent. It can amplify both weaknesses and strengths at machine speed.

Attackers are exploiting human shortcuts, particularly as AI-generated code proliferates. Cybersecurity becomes more challenging in the context of:

Vibe coding without thinking about software best practices

Lazy approvals

Vague or poorly designed prompts

Blind trust in AI outputs

Poor operational discipline

Educate Your Employees

All business units and teams interact with AI systems and AI-generated content. Each decision point becomes an opportunity for control or compromise. Employees should be trained to pause, verify, and escalate.

Raise awareness about modern phishing attempts like voice clones, deepfake video calls, and real-time impersonation. Similarly, educate staff on the risks of “shadow AI”, meaning the use of unapproved AI tools or the passing of confidential information to AI systems.

Sophisticated deception is no longer limited to nation-state actors. This is cybercrime moving closer to street-level scale. Education remains a key security mechanism.

Evolve Your Processes

The defence has now shifted from recognising deception to making it operationally irrelevant. Even if an employee is blackmailed or compromised, organisational checks and balances can limit damage.

For example, consider implementing the following organisation-wide:

High-risk actions, like financial transfers, require multi-party, multi-channel verification.

Everyone uses cryptographic identities (such as passkeys or hardware security keys, rather than passwords alone) for any organisational work.

Out-of-band verification is a standard process, e.g. if a request comes via email, you double-check with the individual via Slack.

One-time credentials are integral to critical business processes.

Well-designed processes interrupt fraud chains.
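
To make the multi-party, multi-channel rule concrete, here is a minimal sketch of the check a payment workflow might run before releasing funds. The `Confirmation` type, the channel names, and the thresholds of two approvers and two channels are assumptions for illustration, not a prescribed policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confirmation:
    approver: str  # who confirmed the request
    channel: str   # how they confirmed it, e.g. "email", "slack", "phone"


def transfer_is_verified(requester: str,
                         confirmations: list[Confirmation],
                         min_approvers: int = 2,
                         min_channels: int = 2) -> bool:
    """Require distinct approvers over distinct channels, excluding the requester."""
    # A requester confirming their own request adds no independent assurance.
    independent = [c for c in confirmations if c.approver != requester]
    approvers = {c.approver for c in independent}
    channels = {c.channel for c in independent}
    return len(approvers) >= min_approvers and len(channels) >= min_channels
```

Under these assumptions, a deepfaked video call that convinces one employee is not enough on its own. The attacker would also need a second person and a second channel.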

Key lesson: Human behaviour is now part of the attack surface. AI models are not built to understand human psychology, organisational politics, or fatigue. However, these factors play a major role in how AI is deployed and trusted.

Final words

AI hasn’t shifted the balance in favour of attackers. It has elevated the advantage of the most organised teams.

You cannot secure everything equally in an AI-heavy environment. But you can:

Map external assets continuously

Identify which models, agents, and data flows truly matter

Apply the strongest controls there first

AI demands discipline. The organisations succeeding are the ones applying proven security principles more systematically. AI security tooling, combined with the right processes, is tilting the advantage back toward defenders.